Welcome to the weird world of driverless cars and ethical robots

Try this thought experiment: Your small children are strapped into the child seats in the back of the car and you are driving down a country lane. As you approach a bend, a cat darts out into the road. If you swerve to avoid the cat, you know that you could collide with an oncoming vehicle. If you brake hard, you may just miss the cat, but the on-board supercomputer that is your human brain tells you that you might smash into the tree on the bend.

The cat gets it. That was what you chose.

And that’s an ethical dilemma. You decided in a flash (no sanctimonious deliberation or hand-wringing here) that killing the cat was the least appalling option, given the many other scenarios, some of which involved probable injury to your children. After all, you might have swerved and not hit an oncoming vehicle (because there wasn’t one coming round the corner at that precise moment). But you didn’t know whether there was an oncoming vehicle or not, only that there was a real possibility of one.

So, in that split second, you made an immensely complex evaluation of the likely outcomes, based on all the known facts and on the probabilities of a number of unknowns. Human beings make highly complex decisions of this kind almost every day. You are remarkable: it has taken evolution several hundred million years to craft an instrument with the extraordinary processing power of your brain hardware, not to mention several millennia of cultural software to guide you in how to use it.

Now let’s take this thought experiment one stage further. This time it’s not a cat that darts into the road… but a child…

There is obviously no right or wrong answer to this. I honestly don’t know what I would do. But you or I driving that car would definitely make a decision in the next millisecond or so, for good or ill. (Thinking about this, I am going to dawdle henceforth on bendy country lanes like a nonagenarian driver on a Sunday afternoon. But the thought experiment is still valid.)

So now comes the point of my in-car ethical dilemma. The pioneers of driverless cars, such as Google’s Waymo (owned by Alphabet Inc., NASDAQ:GOOGL), have found it relatively straightforward to programme machines to accelerate along empty highways, change gear optimally (so as to minimise fuel consumption) and even perform three-point turns in tight spaces. The really challenging part is programming cars to make instant decisions that involve evaluating the possible outcomes of adverse events (like car crashes) and making ethical choices about which outcome is preferable.

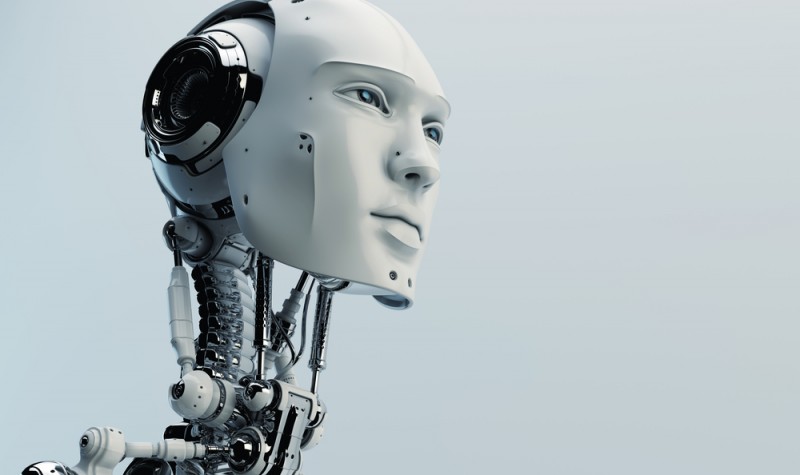

Welcome to the brave new world of ethical robots. And if robots can truly be said to have critical faculties that even simulate ethical thought processes, then surely the era of Artificial Intelligence (AI) has already dawned.

Meet the ethics machines

Self-driving vehicles will have to learn to think like we do, both mechanistically and judgmentally, in order to drive as we do. By extension, the skills that Google and others are developing for driverless cars will push the boundaries of AI.

Cars already use sensors, radar and cameras that give them a picture of the world around them. They also have systems that enable them to cruise down the motorway with optimal efficiency or advance slowly in a traffic jam: for example, Tesla Motors’ (NASDAQ:TSLA) Autopilot or Mercedes-Benz’s Steering Assist (Daimler AG, FRA:DAI). Audi (FRA:NSU), for its part, has a system that can detect hazards in poor visibility using thermal imaging. But giving cars the capacity to make ethical choices is another matter altogether. In fact, it requires a remarkable marriage between engineering and philosophy.

According to New Scientist[i], Google’s self-driving cars will have eight sensors, Uber’s driverless taxis will have 24 and Tesla’s new range will have 21. The cars’ computers combine the data from all their sensors simultaneously into a single “stream”, just as you combine the data from your eyes, ears and nerve endings without even thinking about it.
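To make the idea of a single “stream” concrete, here is a minimal Python sketch. It assumes each sensor delivers readings already ordered by time, and simply interleaves them into one time-ordered feed. The sensor names, timestamps and distance values are hypothetical, and real fusion systems also weigh and reconcile conflicting readings, which this sketch does not attempt.

```python
import heapq
from dataclasses import dataclass, field
from typing import Iterable, Iterator, List


@dataclass(order=True)
class Reading:
    timestamp: float                     # seconds; the only field used for ordering
    sensor: str = field(compare=False)   # hypothetical sensor name
    value: float = field(compare=False)  # hypothetical measurement, e.g. distance in metres


def merge_streams(*streams: Iterable[Reading]) -> Iterator[Reading]:
    """Interleave several per-sensor streams (each sorted by time)
    into one time-ordered stream for the car's computer."""
    return heapq.merge(*streams)


# Hypothetical readings from three sensors watching the same object.
camera = [Reading(0.00, "camera", 12.0), Reading(0.10, "camera", 11.5)]
radar = [Reading(0.05, "radar", 11.8), Reading(0.15, "radar", 11.3)]
lidar = [Reading(0.02, "lidar", 11.9)]

fused: List[Reading] = list(merge_streams(camera, radar, lidar))
for r in fused:
    print(f"{r.timestamp:.2f}s {r.sensor:>6}: {r.value} m")
```

`heapq.merge` is used because it combines already-sorted inputs lazily, so new readings can be consumed as they arrive rather than waiting for every sensor to finish.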

The next stage is to give computers the capacity to extrapolate. For example, if we see a car driving along the road that is then obscured by a parked bus, we assume that it will reappear a second or two later. Unless they are capable of machine learning, computers have to be told this; otherwise they simply assume that the car has disappeared in a puff of smoke.
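One simple way to “tell” a computer about hidden cars is to keep tracking an object after it disappears from view and predict where it should be, assuming it keeps moving at the same speed. The Python sketch below is a hypothetical illustration under that constant-velocity assumption: the road is reduced to one dimension, and the three-second limit (`MAX_OCCLUSION_S`) is an arbitrary value chosen for the example, not a figure from any manufacturer.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Track:
    position: float          # metres along the road (hypothetical 1-D simplification)
    velocity: float          # metres per second, estimated while the car was visible
    time_unseen: float = 0.0


MAX_OCCLUSION_S = 3.0        # assumption: how long we keep believing in a hidden car


def update(track: Track, observed: Optional[float], dt: float) -> Optional[Track]:
    """Advance a track by dt seconds.

    If the sensors see the car, trust the measurement and re-estimate its speed.
    If the car is hidden (observed is None), extrapolate at constant velocity
    instead of deleting it. Drop the track only after MAX_OCCLUSION_S unseen."""
    if observed is not None:
        new_velocity = (observed - track.position) / dt
        return Track(position=observed, velocity=new_velocity, time_unseen=0.0)
    unseen = track.time_unseen + dt
    if unseen > MAX_OCCLUSION_S:
        return None          # hidden too long: stop predicting
    return Track(position=track.position + track.velocity * dt,
                 velocity=track.velocity, time_unseen=unseen)


# A car at 0 m doing 10 m/s disappears behind a bus for three 0.5 s steps.
t = Track(position=0.0, velocity=10.0)
for _ in range(3):
    t = update(t, None, 0.5)
print(t.position)  # 15.0
```

The point of the time limit is that a prediction grows less trustworthy the longer the car stays hidden; a learning system might instead estimate that limit from experience.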


Then you give the machine rules of conduct, just as we are given them in childhood (most of us, anyway). So: avoid contact with humans, animals and inanimate objects, in that order. (Running over a bollard is bad, but much better than running over a human being.) That sounds sensible, but the problem is that programmers cannot possibly anticipate every future accident scenario that requires a rule-based response.
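A rule such as “humans, animals and inanimate objects, in that order” can be expressed as a strict priority ordering. The hypothetical Python sketch below scores each possible manoeuvre by how many of each kind of thing it would hit and compares those counts as tuples, so that avoiding a human always outranks avoiding any number of animals or objects. The manoeuvres and counts are invented for illustration, and the sketch treats outcomes as certain, which real accident scenarios never are.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Manoeuvre:
    name: str
    humans_hit: int
    animals_hit: int
    objects_hit: int


def harm_key(m: Manoeuvre) -> Tuple[int, int, int]:
    # Tuples compare element by element: fewer humans hit wins outright;
    # animals only break ties between equal human counts, then objects.
    return (m.humans_hit, m.animals_hit, m.objects_hit)


def choose(options: List[Manoeuvre]) -> Manoeuvre:
    """Pick the manoeuvre that does least harm under the priority order."""
    return min(options, key=harm_key)


# Hypothetical options at the bend in the lane.
options = [
    Manoeuvre("swerve into bollard", 0, 0, 1),
    Manoeuvre("brake in lane", 0, 1, 0),
    Manoeuvre("swerve into oncoming lane", 1, 0, 0),
]
print(choose(options).name)  # swerve into bollard
```

The weakness the paragraph describes is visible here: the function can only rank the options it is given, so a scenario nobody thought to list simply never appears.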

So it will probably be necessary to release rookie robot cars onto the roads that are capable of learning from their experiences there. Humans, too, start out as learner drivers and become proficient over time, because we get better at anticipating other drivers’ behaviour, a capacity that philosophers call theory of mind. This involves, for example, working out what drivers’ facial expressions indicate about their state of mind. So far, however, machines have very little theory of mind.

One way of solving this problem might be to ban human drivers altogether, so that driverless cars only have to anticipate the behaviour of other driverless cars just like themselves. That would not be welcomed by those of us who still enjoy driving.

Other applications – defence?

One can easily imagine why ethical machines might be needed in the realm of defence. A drone could be programmed to take out a terrorist cell, but only where certain criteria have been met. It would only attack when (a) there is clear evidence that the perpetrators are about to strike and (b) if they do so, there would be a serious risk of loss of innocent life. (If you have seen the film Eye in the Sky, you will know this is already on the agenda.)

Now, I know that this thought will leave many people, including me, feeling uneasy. But giving machines some kind of moral compass may mean that, come the day, they are less inclined to turn on us and kill us.

The lawyers are already on the case

If you think this is all science fiction (or fantasy), consider that yesterday (12 January) the Legal Affairs Committee of the European Parliament called for the development of “a robust European legal framework” to ensure that robots comply with our own ethical standards[ii]. The Committee also called for a voluntary ethical code of conduct to establish who would be accountable for the social, environmental and human health impacts of robotics. It recommended that robot designers include “kill” switches so that robots can be turned off swiftly if they go ape.

The MEPs noted that harmonised rules are urgently needed for self-driving cars. They called for an obligatory insurance scheme and a fund to ensure that victims are fully compensated for future accidents caused by driverless cars. They also argued that, in the long term, a specific legal status of “electronic persons” should be created for the most sophisticated autonomous robots, so as to clarify responsibility in cases of mishap.

Last year, the US Department of Transportation produced the first Federal Automated Vehicles Policy. So far only Apple Inc. (NASDAQ:AAPL) has provided feedback on the Policy, and in doing so it unwittingly confirmed that it too is working on self-driving cars.

Future problems

Even if we come to trust reliable ethical machines, there are still nightmare scenarios ahead of us. What would happen if unscrupulous hackers broke into a fleet of self-driving cars and re-modelled their ethics? Should the algorithms that determine an ethical machine’s behaviour not be put into the public domain so that everybody can scrutinise them? And, in any case, whose moral code should be reflected in the programming? Would Sharia-compliant driverless cars behave differently from Buddhist ones?

We have to face up to the fact that these machines, ethical or not, will occasionally make errors, and that sooner or later someone, somewhere will get killed. But then the fatality rate for self-driving cars is, in the long term, likely to be far lower than that for cars driven by humans. The ethical machines will be vegan teetotallers who never, ever lose their tempers behind the wheel. They will never be distracted by reading a text message or being nagged by their other halves, and will never fall asleep at the wheel.

But not everyone thinks driverless cars will make life better. The Spectator’s Toby Young has argued that driverless cars will promote a shift away from public transport towards more car and lorry journeys[iii]. He quotes Dr Zia Wadud of the Faculty of Engineering at the University of Leeds, who estimates that once driverless cars become fully operational, in about 20 years’ time, we will see a 60 percent increase in road usage. That means we will have to build even more roads.

Driverless cars will be slower, more expensive and socially divisive, says Toby. That’s told you. But with Google, Apple, Tesla, Toyota (TYO:7203), Daimler and others investing like crazy in this technology, whatever our objections, it’s bound to happen anyway. On the other side of the transition, we shall awake to find ourselves living in a world of intelligent, and ethically inclined, machines.

Next up: robot clergymen.

[i] See: Sandy Ong, “Auto correct”, New Scientist, 7 January 2017, p. 36.

[ii] See: http://www.europarl.europa.eu/news/en/news-room/20170110IPR57613/robots-legal-affairs-committee-calls-for-eu-wide-rules

[iii] See: http://www.spectator.co.uk/2016/10/how-driverless-cars-will-make-your-life-worse/
